I went to get a public library card the other day. After I filled out some forms, the nice man at the counter punched my information into the computer, clicked a few buttons, and reached under the counter. He pulled out a library card.

I was instantly transported back to a time when I couldn't see counter tops. When I was a kid, my mom had a public library card on her keychain. And now, as an adult, I had a public library card on my keychain too. I signed the back, popped the card out of its cutout template, and wrestled the card onto my keychain. As my keys jangled and jingled on my walk back home, I thought about how time leaves traces on everything - even on something as trivial as a keychain.

Why have I rarely felt such a time-travelling experience in a digital medium? Why does it feel like everything gets lost in the digital void? How can something as trivial as a keychain evoke such a rich emotional experience, but reading the tweets of a few hundred people cannot?

In order to build richer digital experiences, we need to enforce two principles: append-only and uniqueness-by-default.

Time is append-only, which means that you can only ever add things; you can't go back and make edits or delete things. Half of grappling with the human condition is about wrestling with time's being append-only.

But being append-only bestows virtues, too. Events are rarely obliterated completely. As such, the physical things that "feel" beautiful make an extra effort to ensure that time can leave its mark as honestly as possible. It's not the physicality itself that makes the thing beautiful, but rather its potential to preserve the time gone by as honestly as possible.

Ignoring technological constraints, what if digital interfaces were append-only, and actually encouraged to be so?

Twitter has a great example: the infamous inability to edit tweets. Although tweets can be deleted and re-written, preventing users from editing past tweets incentivizes them to treat Twitter more like a platform for honest stream-of-consciousness than one for life updates and showing off to friends. No one will delete a tweet just to re-write it from scratch and fix a typo. * Unless, of course, you're a company account where the stakes of a typo are higher. I'm talking about normal, everyday individuals here.

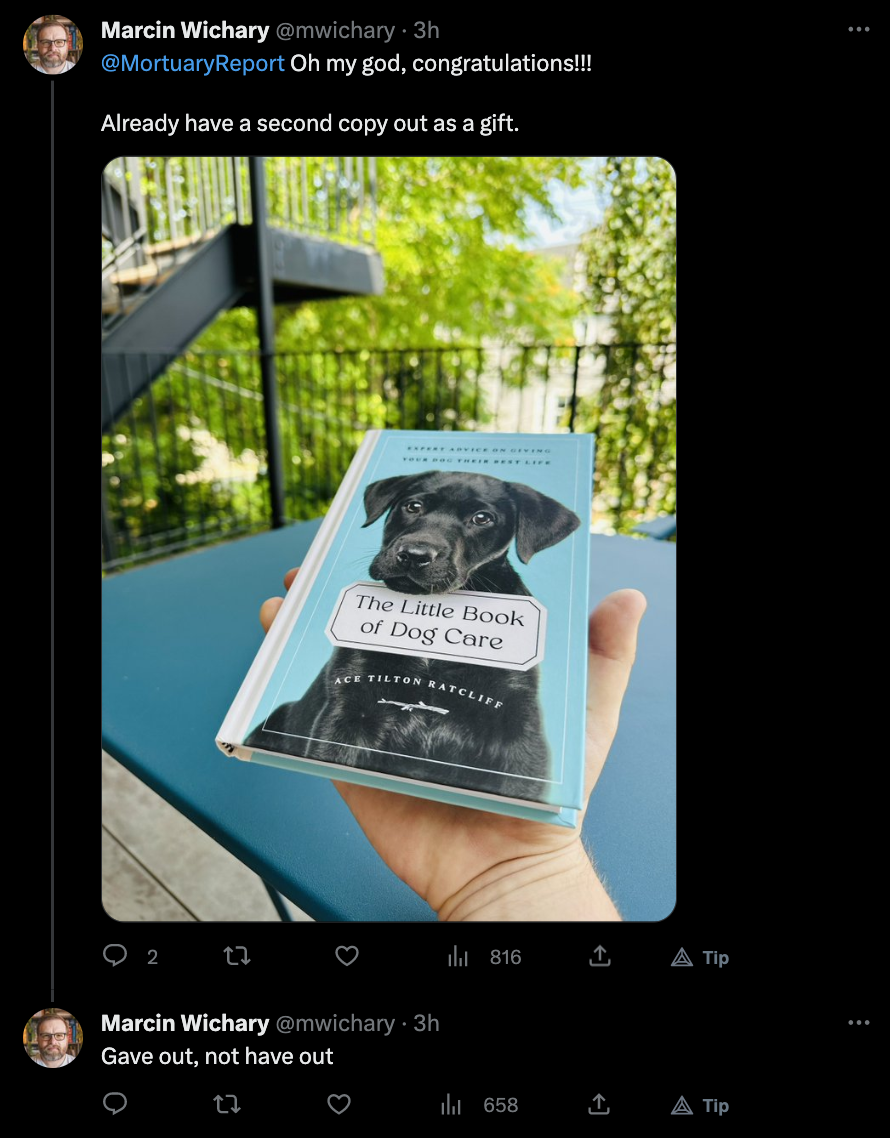

Look at this tweet from Marcin Wichary:

Instead of deleting the entire tweet, he just appended an additional tweet. Doesn't that feel more... realistic? More honest? Twitter is able to provide a fantastic canvas for voices because you can literally see words and ideas taking shape.

This append-only principle is easy enough to incorporate into daily life. For instance, below is a small demo of a personal journaling tool that doesn't allow backspace. The initial feeling is, of course, annoyance. But after using it for my past few reflections, my journal entries appear to be more "alive" - just because all the typos are right there to see, in the flesh. Readability isn't an issue either; on the fly, I create little cues to inform my future self of what I meant to type (see the parentheticals in the demo).

The current incarnation of my note-taking workflow also builds on the append-only principle. I send quick notes to my Telegram bot, and it appends these notes to a Markdown file that I pull down from my personal server at the end of the day. There's no mechanism for me to delete or edit my notes unless I access my server.
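To make the idea concrete, here is a minimal sketch of the appending step in Python. It is not the actual bot: the Telegram side is left out entirely, and the function name `append_note`, the timestamp format, and the one-bullet-per-note layout are all assumptions made for illustration. The only thing it tries to show is that the file is opened in append mode, so earlier notes are never rewritten.

```python
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional


def append_note(path: Path, text: str, now: Optional[datetime] = None) -> str:
    """Append one timestamped Markdown bullet to `path` and return the line written.

    Hypothetical helper: the name, timestamp format, and bullet layout are assumptions.
    """
    stamp = (now or datetime.now(timezone.utc)).strftime("%Y-%m-%d %H:%M")
    # Collapse runs of whitespace (including newlines) so each note stays on one line.
    line = f"- {stamp} {' '.join(text.split())}\n"
    # Mode "a" only ever writes at the end of the file; earlier notes are untouched.
    with path.open("a", encoding="utf-8") as f:
        f.write(line)
    return line
```

Calling `append_note(Path("notes.md"), "typos and all")` adds one bullet to the end of `notes.md`. There is deliberately no matching edit or delete function; changing the past would require opening the file by hand.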

Adopting systems that are append-only has felt much better for me. There's a certain comfort in knowing how my thoughts evolve over time - they don't feel as if they've been rudely abducted from time. The stakes feel lower because I visually see that "perfection" wasn't accomplished in one go, but with multiple iterations over an initially imperfect thing.

Append-only isn't enough, however; it really only tells you a general, global principle to adhere to. We need to look at the local minutiae.

Photographer Sally Mann has a remarkable quote in her book, Hold Still: "When an animal, a rabbit, say, beds down in a protecting fencerow, the weight and warmth of his curled body leaves a mirroring mark upon the ground. The grasses often appear to have been woven into a birdlike nest, and perhaps were indeed caught and pulled around by the delicate claws as he turned in a circle before subsiding into rest. . . Each of us leaves evidence on the earth that in various ways bears our form."

In the physical world, mirroring marks are unique-by-default. * Onfim was a kid in 13th-century Novgorod who etched his doodles into birch bark. We still have his etchings - how incredible is that?

But current digital experiences enforce uniformity-by-default. Every post on Facebook, Instagram, Twitter - they all look more-or-less the same. Your profile and your name, unfortunately, aren't enough to be differentiating factors - it's like going to a neighborhood and seeing multiples of the same house lined up on the street, with the only difference being the house numbers. The interior design of every house is the same too - just the color of the furniture and the photos in the photo albums are different.

Uniformity-by-default incentivizes people to try and stand out in the crowd. There's no question why these experiences are anxiety-inducing when the only real differentiating factor is proof-of-social-capital (e.g. likes, retweets, etc.).

On the other hand, think about public bathroom walls and those little slips of paper that you use to test out pens at the stationery store. What do they have in common? They're both mediums where uniformity is close to impossible - you can't help but write in your own handwriting, and your handwriting alone is expressive enough to differentiate you from hundreds of other strangers.

In order to embrace uniqueness-by-default, we need two things: (1) a medium that is open-ended enough to support non-uniform expression and (2) tools that are expressive enough to transfer uniqueness into the medium.

Luckily, AI appears to be adequate for (2).

Let me give a brief overview of how machine learning models think. (This definitely doesn't apply to all models, mind you.) They take information and project it into what we call the latent space. The latent space is essentially a numeric compression of whatever information was fed to the model.

The latent spaces for recent large language models are extremely expressive. Thus generative language models perform a kind of digital alchemy: compressing the unquantifiable into a quantifiable form, and, conversely, extracting the unquantifiable out of the quantifiable.

This suggests that language models can be used as "universal APIs", as patio11 noted. We can insert ourselves into the digital world more naturally, and we'll leave the models to deal with the technological plumbing in between. Our ways of interacting with digital worlds haven't changed since the 1970s, but maybe we won't have to be dumbed down to using just a keyboard and a mouse in the near future.

It's also important to note here that language is just an interface. At some point, we learn to speak, and then to read, and then to write. But there's a whole slew of meta-data that we impress onto the world before we start speaking our first words. I'm always surprised when I watch young kids; each and every one expresses their personalities in such unique and rambunctious ways, it's nuts. Some dance, some sing, and some throw their Lego blocks at people.

Imagine a multi-modal model that can take all these forms of expression - verbal and non-verbal alike, speaking, drawing, dancing, the clothes you wear, etc. - and concoct them into a personalized ink that can be used freely on digital canvases: a digital experience where I can tell who you are without a profile picture and a name.

We don't need more bicycles for the mind. I want a digital pencil for the mind - the thinnest possible layer between me and the digital me. While the bike that you ride dictates the style in which you pedal, a pencil is hardly opinionated. How many times have you had a conversation like this: "How'd you know I wrote that?" "I recognized your handwriting."

In order to illustrate my idea for rich digital experiences, take a look at this small tool that I made to see how the text-embedding-ada-002 model thinks. The model maps sentences into 1536-dimensional space, and I do further transformations to project each 1536-dimensional vector down to a 3-dimensional color space. In the demo below, text-embedding-ada-002 thinks that "anger" and "sadness" are both blue-ish green, whereas "surprise" is a yucky yellow.
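For a rough feel of what "embedding to color" can look like, here is a small Python sketch. The transformations used in the tool itself aren't spelled out here, so this substitutes a stand-in: a fixed random projection onto three axes, followed by a sigmoid squash into 0..255. The names `make_projection` and `embedding_to_rgb`, the seed, and the clamping range are all assumptions, and the embedding is expected to come from elsewhere (no API call is made).

```python
import math
import random
from typing import List, Sequence, Tuple

DIM = 1536  # embedding size of text-embedding-ada-002, as stated above


def make_projection(dim: int = DIM, seed: int = 0) -> List[List[float]]:
    """Build a fixed 3 x dim matrix of Gaussian weights (an assumed stand-in)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(3)]


def embedding_to_rgb(
    embedding: Sequence[float], projection: List[List[float]]
) -> Tuple[int, int, int]:
    """Project an embedding onto three axes and squash each score into 0..255."""
    channels = []
    for row in projection:
        score = sum(w * x for w, x in zip(row, embedding))
        # Clamp so math.exp cannot overflow on extreme inputs.
        score = max(-50.0, min(50.0, score))
        # The sigmoid maps any real score into (0, 1); scale to an 8-bit channel.
        channels.append(round(255 / (1 + math.exp(-score))))
    return (channels[0], channels[1], channels[2])
```

Because the projection is built once from a fixed seed, the same sentence embedding always lands on the same color, and nearby embeddings tend to land on nearby colors. A learned projection (PCA over a corpus of embeddings, say) would likely give more meaningful hues; the random matrix is only the simplest thing that works.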

What if the model wasn't text-embedding-ada-002, but a model trained on your own personal writing, actions, speech, etc.? Then theoretically, the model would express itself in a way that is, by default, unique. Of course, the demo above with colors can hardly be called "expression", but I can imagine having personal "stickers" that are generated from your personal joint image-text latent space (e.g. CLIP). In essence, the model would be a compressed version of you and what you impress onto the world.

My man, Marshall McLuhan: The medium is the message.

I don't know what a new medium for AI-supported uniqueness-by-default might look like. But this is definitely an exciting time: maybe creating humanistic, beautiful, timeless experiences will become easier than ever. I hope it does.